AI fakes in adult content: the real threats ahead

Sexualized deepfakes and “undress” images are now cheap to generate, hard to trace, and devastatingly credible at first glance. The risk isn’t abstract: AI-powered undressing apps and online nude generator services are being used for intimidation, extortion, and reputational damage at scale.

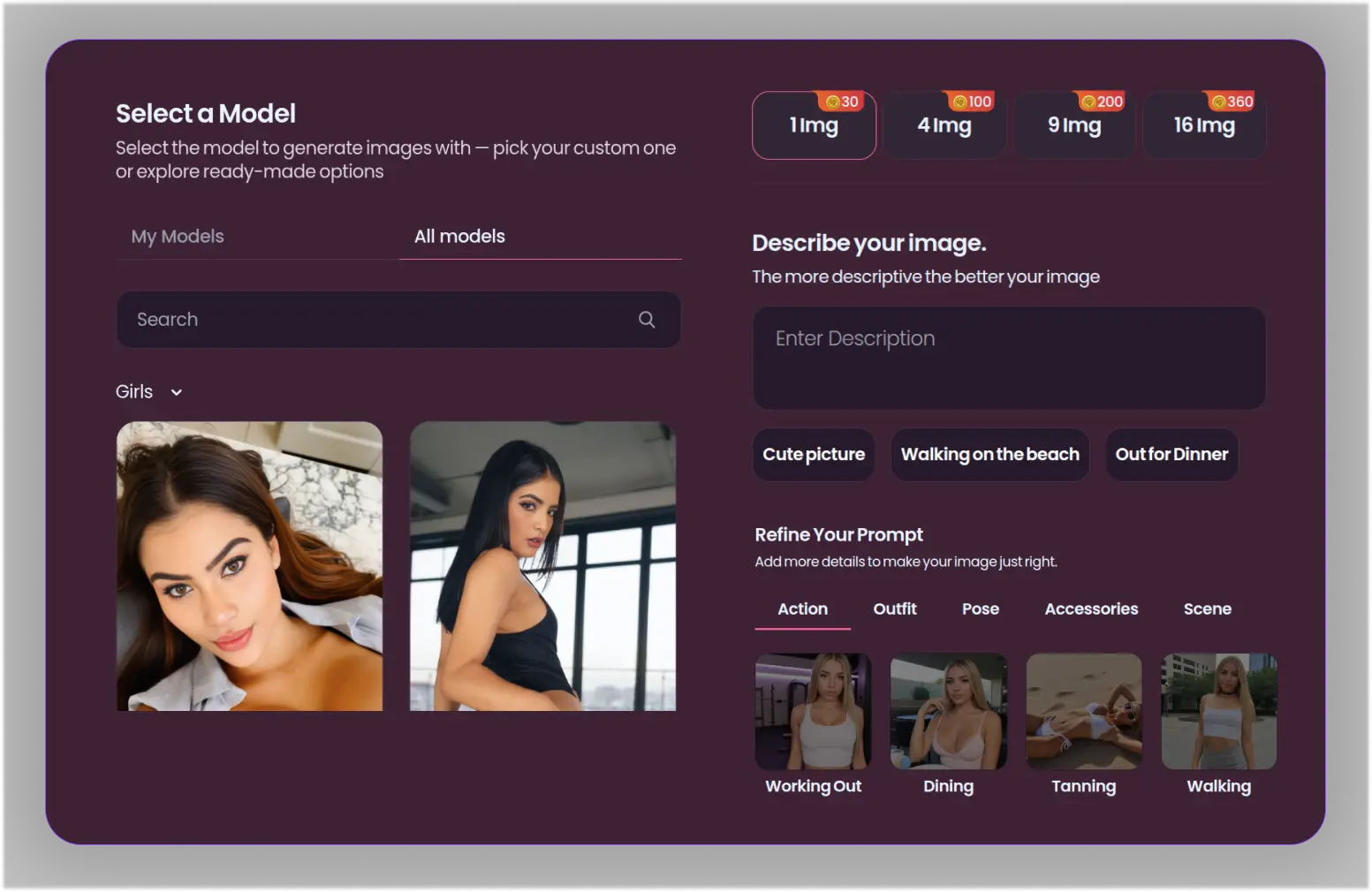

The market has moved far beyond the early DeepNude app era. Today’s adult AI apps, often branded as “AI undress” tools, “AI nude generators,” or virtual “AI models,” promise realistic nude images from a single photo. Even when the output isn’t perfect, it’s convincing enough to trigger distress, blackmail, and social fallout. Across platforms, people encounter output from brands such as N8ked, DrawNudes, UndressBaby, AINudez, PornGen, and other explicit generators. These tools differ in speed, realism, and pricing, but the harm pattern is consistent: non-consensual media is created and spread faster than most victims can respond.

Addressing this requires two parallel skills. First, learn to spot the common red flags that betray AI manipulation. Second, have a response plan that prioritizes evidence, fast reporting, and safety. What follows is a practical, experience-based playbook used by moderators, trust-and-safety teams, and digital forensics practitioners.

How dangerous have NSFW deepfakes become?

Accessibility, realism, and amplification combine to raise the risk. “Undress apps” are remarkably easy to use, and social platforms can spread a single manipulated image to thousands of viewers before a takedown lands.

Low friction is the core problem. A single selfie can be scraped from a profile and fed into a clothing-removal tool in minutes; some services even automate batches. Quality is inconsistent, but extortion doesn’t require photorealism, only believability and shock. Off-platform coordination in group chats and data dumps further increases reach, and many hosts sit outside major jurisdictions. The result is a whiplash timeline: creation, threats (“send more or we post”), and distribution, often before the target knows how to ask for help. That makes detection and immediate triage critical.

Red flag checklist: identifying AI-generated undress content

Most undress deepfakes share repeatable tells across anatomy, physics, and context. You don’t need professional tools; train your eye on the details that models consistently get wrong.

First, look for edge artifacts and boundary problems. Straps, ties, and seams often leave phantom marks, and skin can look unnaturally smooth where fabric would have compressed it. Jewelry, especially necklaces and earrings, may float, merge into the skin, or vanish between frames of a short video. Tattoos and birthmarks are frequently missing, blurred, or misaligned relative to the source photos.

Second, scrutinize lighting, shadows, and reflections. Shadows under the breasts or along the ribcage can look airbrushed or inconsistent with the scene’s light direction. Reflections in mirrors, windows, and glossy surfaces may still show the original clothing while the main subject appears “undressed,” a high-signal inconsistency. Specular highlights on skin sometimes repeat in tiled patterns, a subtle AI fingerprint.

Third, check texture realism and hair behavior. Skin pores may look uniformly synthetic, with abrupt changes in detail around the torso. Body hair and fine flyaways around the shoulders and neckline often blend into the background or show halos. Strands that should overlap the body may be cut off, a legacy artifact of the segmentation-heavy pipelines used by many undress generators.

Fourth, assess proportions and continuity. Tan lines may be absent or painted on. Breast shape and gravity may not match the person’s age and posture. Fingers or objects pressing into the body should compress the skin; many fakes miss this small deformation. Clothing remnants, such as a sleeve edge, may imprint into the “skin” in impossible ways.

Fifth, read the context. Crops are often chosen to avoid difficult regions such as joints, hands on the body, or places where garments meet skin, which hides generator failures. Background logos or text may warp, and EXIF metadata is often stripped or shows editing software rather than the claimed capture device. A reverse image search regularly turns up the clothed source photo on another platform.
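The EXIF check can be done without special tools. The sketch below is a minimal illustration using only the Python standard library: it walks a JPEG’s header segments and reports whether an APP1 “Exif” block is present. The function name `has_exif` is hypothetical, and the parser deliberately ignores edge cases such as fill bytes between markers. Treat a missing block as weak evidence, since major platforms strip metadata on upload.

```python
import struct


def has_exif(data: bytes) -> bool:
    """Return True if the JPEG bytes contain an APP1 segment with an Exif header.

    Minimal sketch: walks marker segments from the start of the file and stops
    at Start of Scan (0xDA), where the compressed image data begins.
    """
    if data[:2] != b"\xff\xd8":  # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            break  # unexpected byte: stop rather than guess
        marker = data[pos + 1]
        if marker in (0xDA, 0xD9):  # SOS or EOI: no more header segments
            break
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", data[pos + 2 : pos + 4])
        if marker == 0xE1 and data[pos + 4 : pos + 10] == b"Exif\x00\x00":
            return True
        pos += 2 + length  # skip marker (2 bytes) plus the segment body
    return False
```

Call it on the raw bytes of a saved file, for example `has_exif(open("capture.jpg", "rb").read())`. If a block is present, a full EXIF reader can then show which software or device is recorded.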

Sixth, examine motion cues if it’s a video. Breathing doesn’t move the upper torso; collarbone and rib motion lags behind the audio; and accessories, necklaces, and clothing don’t react to movement. Face swaps sometimes blink at odd intervals compared with natural blink rates. Room acoustics and voice resonance can contradict the visible environment if the audio was generated or lifted from elsewhere.

Seventh, look for duplicates and symmetry. Generators tend toward symmetry, so you may find skin blemishes mirrored across the body or identical fabric wrinkles on both sides of the frame. Background textures sometimes repeat in unnatural tiles.

Eighth, look for behavioral red flags. New accounts with little history that suddenly post NSFW “leaks,” aggressive DMs demanding payment, or muddled stories about how a “friend” obtained the media suggest a playbook, not authenticity.

Ninth, check consistency across a set. If multiple “images” of the same person show varying anatomical features (shifting moles, disappearing piercings, or different room details), the likelihood that you’re dealing with an AI-generated set rises sharply.

What’s your immediate response plan when deepfakes are suspected?

Preserve evidence, stay calm, and run two tracks at once: removal and containment. Acting within the first hour matters more than crafting the perfect response.

Start with documentation. Capture full-page screenshots, the complete URL, timestamps, usernames, and any IDs visible in the address bar. Keep the original messages, including threats, and record a screen video that scrolls through the surrounding context. Do not modify the files; store them in a secure folder. If extortion is involved, do not pay and do not negotiate. Blackmailers typically escalate after payment because it confirms you will engage.
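An append-only log makes this documentation step repeatable. The Python sketch below is one way to do it, assuming the screenshots or recordings are already saved to disk. The function name `log_evidence` and the JSON Lines format are illustrative choices, not a requirement of any platform. Recording a SHA-256 digest of each saved file lets you show later that the file has not changed since capture.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large screen recordings aren't loaded into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def log_evidence(log_file: Path, capture: Path, url: str, username: str, note: str = "") -> dict:
    """Append one evidence record as a JSON line (hypothetical helper) and return it."""
    record = {
        "logged_at_utc": datetime.now(timezone.utc).isoformat(),
        "url": url,
        "username": username,
        "file": str(capture),
        "sha256": sha256_of(capture),
        "note": note,
    }
    # Append mode: earlier records are never rewritten.
    with log_file.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record
```

Keep the log in the same secure folder as the captures and only ever append to it. The same file doubles as the URL and username list you will need when filing reports.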

Next, trigger platform and search-engine removals. Report the content under “non-consensual intimate imagery” or “sexualized deepfake” where those categories exist. File copyright takedowns if the fake is a manipulated derivative of your own photo; several hosts act on these even when the claim is contested. For ongoing protection, use a hash-based service such as StopNCII to create digital hashes of your intimate images (and any targeted images) so participating platforms can proactively block future uploads.
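To see how a hash can block reposts without anyone receiving the photo, here is a toy perceptual hash in Python. It is an “average hash,” a deliberately simple stand-in: StopNCII and similar services use their own, more robust algorithms, which this does not reproduce. It also assumes the image has already been shrunk to an 8×8 grayscale grid, a step an image library would normally perform. Only the 64-bit fingerprint would leave the device, and near-identical copies produce fingerprints that differ in only a few bits.

```python
from typing import List

Grid = List[List[int]]  # 8x8 grayscale values, 0-255 (assumed already downscaled)


def average_hash(grid: Grid) -> int:
    """Return a 64-bit fingerprint: one bit per pixel, set if brighter than the mean."""
    pixels = [p for row in grid for p in row]
    if len(pixels) != 64:
        raise ValueError("expected an 8x8 grid")
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count differing bits; a small distance suggests the same underlying image."""
    return bin(a ^ b).count("1")
```

A participating platform would hash each new upload and compare it against the submitted fingerprints, flagging anything under a distance threshold. The threshold is a tuning choice, not a fixed value.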

Notify trusted contacts if the content targets your social circle, workplace, or school. A brief note explaining that the material is fabricated and being addressed can reduce gossip-driven spread. If the subject is a minor, stop everything and contact law enforcement immediately; treat it as an emergency involving child sexual abuse material and never circulate the file further.

Finally, consider legal routes where applicable. Depending on the jurisdiction, victims may have claims under intimate image abuse laws or for impersonation, harassment, defamation, or data protection violations. A lawyer or a local victim support organization can advise on urgent remedies and evidence requirements.

Removal strategies: comparing major platform policies

Most major platforms ban non-consensual intimate imagery and deepfake porn, but policies and workflows differ. Act quickly and report on every surface where the content appears, including mirrors and short-link hosts.

| Platform | Policy focus | Where to report | Typical speed | Notes |
|---|---|---|---|---|
| Meta platforms | Non-consensual intimate imagery and AI manipulation | In-app reporting and Safety Center | Hours to several days | Supports preventive hashing |
| X (Twitter) | Non-consensual explicit media | In-app reporting and policy forms | Inconsistent, often days | May require multiple reports |
| TikTok | Sexual exploitation and synthetic media | In-app reporting | Fast | Blocks future uploads automatically |
| Reddit | Non-consensual intimate media | Report the post, contact subreddit mods, and use the sitewide form | Varies by community | Request removal and a user ban at the same time |
| Independent hosts/forums | Terms prohibit doxxing and abuse; NSFW rules vary | abuse@ email or web form | Highly variable | Use legal takedown processes |

Your legal options and protective measures

The law is catching up, and you likely have more options than you think. Under many regimes you don’t need to prove who made the fake to seek removal.

In the UK, sharing sexually explicit deepfakes without consent is a criminal offense under the Online Safety Act 2023. In the EU, the AI Act requires labeling of AI-generated content in certain situations, and privacy laws such as the GDPR support takedowns where use of your likeness lacks a legal basis. In the US, dozens of states criminalize non-consensual intimate imagery, several with explicit deepfake provisions, and civil claims for defamation, intrusion upon seclusion, or right of publicity often apply. Many countries also offer fast injunctive relief to curb distribution while a case proceeds.

If an undress image was derived from your original photo, copyright routes may help. A DMCA notice targeting the derivative work and the reposted original often leads to faster compliance from hosts and search engines. Keep notices factual, avoid over-claiming, and list the specific URLs.

Where platform enforcement stalls, escalate with follow-up reports citing the platform’s stated bans on “AI-generated explicit material” and “non-consensual intimate imagery.” Persistence matters: multiple detailed reports outperform a single vague complaint.

Risk mitigation: securing your digital presence

You can’t eliminate risk entirely, but you can reduce exposure and improve your leverage if a problem starts. Think in terms of what can be scraped, how it can be remixed, and how fast you can respond.

Lock down your profiles by limiting public high-resolution images, especially front-facing, well-lit selfies, which undress tools favor. Consider subtle watermarks on public photos and keep the originals stored so you can prove provenance when filing takedowns. Review friend lists and privacy settings on platforms where strangers can DM you or scrape your content. Set up name-based alerts on search engines and social sites to catch leaks early.

Prepare an evidence kit in advance: a template log for URLs, timestamps, and usernames; a secure cloud folder; and a short statement you can give moderators explaining the deepfake. If you manage business or creator accounts, consider C2PA Content Credentials for new uploads where supported to assert authenticity. For minors in your care, lock down tagging, block public DMs, and teach them about sextortion scripts that start with “send a private pic.”

At work or school, find out who handles online safety issues and how quickly they act. Having a response path in place reduces panic and delays if someone tries to circulate an AI-generated “realistic nude” claiming it shows you or a colleague.

Did you know? Four facts most people miss about AI undress deepfakes

Most deepfake content online is sexual. Several independent studies over the past few years found that the majority of detected deepfakes, often more than nine in ten, were pornographic and non-consensual, which matches what platforms and researchers see during takedowns. Hashing works without publishing your image: hash-blocking initiatives such as StopNCII create a digital fingerprint on your device and share only that hash, not the photo, to block reposts across participating sites. EXIF metadata rarely helps once content is posted; major platforms strip it on upload, so don’t rely on metadata for provenance. Provenance standards are gaining ground: cryptographically signed “Content Credentials” can embed an edit history, making it easier to prove what’s authentic, but adoption across consumer apps is still uneven.

Ready-made checklist to spot and respond fast

Pattern-match for the key tells: boundary irregularities, lighting mismatches, texture and hair anomalies, proportion errors, context mismatches, motion and audio mismatches, mirrored repeats, suspicious account behavior, and inconsistency across a set. If you find two or more, treat the content as likely manipulated and switch to response mode.
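The “two or more tells” rule is simple enough to encode, which can help a moderation team apply it consistently. The Python sketch below uses hypothetical tell labels, one per item in the checklist. The default threshold of two comes from the checklist itself; it is a triage heuristic, not a forensic verdict.

```python
from typing import Iterable

# Hypothetical labels, one per tell in the checklist.
TELLS = frozenset({
    "boundary_irregularities",
    "lighting_mismatch",
    "texture_or_hair_anomaly",
    "proportion_error",
    "context_mismatch",
    "motion_or_audio_mismatch",
    "mirrored_repeats",
    "suspicious_account",
    "inconsistent_set",
})


def triage(observed: Iterable[str], threshold: int = 2) -> str:
    """Return 'likely manipulated' once `threshold` distinct known tells are observed."""
    seen = set(observed)  # duplicates count once
    unknown = seen - TELLS
    if unknown:
        raise ValueError(f"unknown tells: {sorted(unknown)}")
    return "likely manipulated" if len(seen) >= threshold else "keep reviewing"
```

Rejecting unknown labels keeps reviewers from silently miscounting because of a typo.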

Capture evidence without resharing the file. Report the content on every platform under its non-consensual intimate imagery or explicit deepfake policy. Use copyright and personal-rights routes in parallel, and submit a hash to a trusted blocking service where available. Send trusted contacts a brief, factual note to head off amplification. If extortion or minors are involved, escalate to law enforcement immediately and refuse any payment or negotiation.

Above all, act fast and methodically. Undress apps and online nude generators rely on shock and speed; your advantage is a calm, documented process that triggers platform systems, legal hooks, and social containment before a fake can define your story.

For clarity: brands such as N8ked, DrawNudes, UndressBaby, Nudiva, and similar AI undress apps and generator services are mentioned only to describe risk patterns, not to endorse their use. The safest position is simple: don’t engage with NSFW deepfake production, and know how to dismantle it when it targets you or people you care about.